Co-written with Jeanine Brosch, Matt Mast, and Robert Nowadly

On some projects, we work alongside a client’s development team. On others, we work as part of a distributed team of developers for increased collaboration. For one such engagement, we were hosting our client’s infrastructure at our site, on our hardware.

Our client relied on us to report back on build and test results. The release process for the software was highly manual and only truly understood by one person, which presented a huge product risk. For several years we recommended moving the hosting outside of our walls, preferably into a cloud environment where the distributed teams could all see the build pipeline. This would also allow the deployment of the software to be automated.

After consulting with our client, we decided to move forward with a Continuous Integration/Continuous Delivery (CI/CD) pipeline in the cloud. Our client preferred Azure because they were a Microsoft shop and already had Azure infrastructure in other areas of their business.

Our client was interested in exploring Kubernetes and Docker because they were hot topics in the cloud world. However, we wanted to consider all options in order to help our client reach their ultimate goal: push-button deployment within 2 months.

Our DevOps team learned a few lessons while evaluating solutions:

- Azure container services didn’t support Windows containers.

- Compatibility among versions of Windows containers is a whole different blog post we won’t bore you with. Suffice it to say, Windows containers aren’t nearly as portable as Linux containers, and care needs to be taken when deciding which host operating system they will run on.

- We tried Docker Swarm, but after 4 hours of debugging container startup issues, we eliminated that option.

- Kubernetes, a portable, extensible open-source platform for managing containerized workloads and services, was the best option. Kubernetes is quickly becoming the de facto choice for container orchestration. It handles load balancing, autoscaling, ingress routing, and configuration management (among other things). Tooling and documentation for it are also widely available. Helm, the Kubernetes package manager, allowed us to easily set up a reverse proxy with automatic TLS certificate creation without having to implement it ourselves.

So we built the pipeline and everything worked! No, not really. We had to move from:

- A web app normally hosted on bare metal with Internet Information Services (IIS), to a Docker container hosted in the cloud.

- Manually provisioned databases with no automation around schema creation, to Dockerized databases that bootstrap themselves on startup to back the automated system tests.

- Multiple separately configured application installations for 4 different testing scenarios, to one configurable Docker image that handles every test case.

- Configuration values that lived in flat files not held in source control, to templated configurations with sensible defaults.

- Multiple background Windows services that assist the web application, to a consolidated container where all of the services and the web app run. One of the primary motivations for going this route was that the services had to start in a very specific order relative to the application. The application was developed with a physical server in mind, and there wasn’t time to re-architect it to support more dynamic connections, so we stuck to keeping things simple.

**= CULTURE SHOCK!**

It took the team some getting used to. Before these changes, they didn’t have to worry about how deployable the application was. There was an initial struggle for the team to start thinking with a “DevOps” attitude and structure new code appropriately, but they soon embraced it.
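To make the move from untracked flat files to templated configuration a little more concrete, here is a minimal sketch of the idea. It is not the project’s actual code: the setting names (`DB_HOST`, `DB_PORT`, `LOG_LEVEL`), the file layout, and the helper functions are all hypothetical, and Python’s standard `string.Template` stands in for whatever templating tool a team prefers.

```python
import os
from string import Template

# Hypothetical template for an application settings file.
# The keys are illustrative, not taken from the real project.
CONFIG_TEMPLATE = Template(
    "DatabaseHost=$DB_HOST\n"
    "DatabasePort=$DB_PORT\n"
    "LogLevel=$LOG_LEVEL\n"
)

# Sensible defaults, kept in source control next to the template.
DEFAULTS = {
    "DB_HOST": "localhost",
    "DB_PORT": "1433",
    "LOG_LEVEL": "Info",
}


def render_config(overrides=None):
    """Render the template, letting overrides win over the defaults."""
    values = dict(DEFAULTS)
    values.update(overrides or {})
    # substitute() raises KeyError if a placeholder has no value,
    # so a missing setting fails loudly instead of silently.
    return CONFIG_TEMPLATE.substitute(values)


def overrides_from_env(environ=os.environ):
    """Collect only the settings the template knows about from the environment."""
    return {key: environ[key] for key in DEFAULTS if key in environ}


if __name__ == "__main__":
    # A container entrypoint could write this output to the settings file
    # before starting the application.
    print(render_config(overrides_from_env()), end="")
```

With this shape, one image can serve several test scenarios: each scenario only sets the environment variables that differ from the defaults when the container starts.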

We also moved Jenkins off of our internal servers and into Azure. We used a solution template to create the instance with minimal configuration. This solution template provided a lot of quick wins for us, including automatically scaling build agents up and down when jobs were fired off, and storing build artifacts in Azure Blob Storage so other members of the team could easily access them. We did need some control over which virtual machine images the build agents used, so we took advantage of Packer to script the creation of these images.

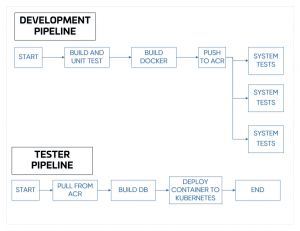

All of the jobs we created were codified and used as part of a Jenkins Pipeline. We created the following jobs to automate the entire process:

- A multibranch pipeline job that runs unit tests and builds the application container – Before this, developers had no way to have Jenkins build and test code on their feature branches.

- A job that runs system tests against containers built off of the master branch – Since the build agents are on-demand virtual machines, we chose a level of parallelization that balances running time against cost.

- A job that creates and sets up the schema of SQL databases in Azure to support manual testing – This reduces the time to get a functioning database set up from weeks to a matter of minutes.

- A job that deploys / removes application instances in Kubernetes – This and the SQL database creation job allowed testers to provision their own environments on demand without having to wait for an operations person to go through the hassle of manually setting everything up.
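The running time versus cost trade-off for the system test job can be reasoned about with a rough model. The sketch below is only an illustration, not how we actually picked our numbers: the suite names, durations, startup overhead, and price are made up, and it assumes every agent is billed until the slowest shard finishes.

```python
import heapq


def shard_suites(durations, agents):
    """Greedily assign suites (longest first) to the least-loaded agent."""
    loads = [(0.0, i) for i in range(agents)]
    heapq.heapify(loads)
    shards = [[] for _ in range(agents)]
    ordered = sorted(durations.items(), key=lambda kv: (-kv[1], kv[0]))
    for name, minutes in ordered:
        load, i = heapq.heappop(loads)
        shards[i].append(name)
        heapq.heappush(loads, (load + minutes, i))
    return shards


def estimate(durations, agents, startup_minutes, cost_per_agent_minute):
    """Return (wall-clock minutes, total cost) for a given agent count."""
    shards = shard_suites(durations, agents)
    busiest = max(sum(durations[name] for name in shard) for shard in shards)
    wall = startup_minutes + busiest
    # Assumption: all agents stay allocated until the slowest shard is done.
    cost = agents * wall * cost_per_agent_minute
    return wall, cost


if __name__ == "__main__":
    # Hypothetical suite durations in minutes.
    suites = {"login": 30, "reports": 20, "search": 10}
    for n in (1, 2, 3):
        wall, cost = estimate(suites, n, startup_minutes=5, cost_per_agent_minute=1)
        print(f"{n} agent(s): {wall} min wall clock, cost {cost}")
```

In this toy example, going from one agent to two nearly halves the wall-clock time for a small increase in cost, while a third agent costs more without finishing any sooner because the longest suite dominates. That kind of diminishing return is what sets a practical ceiling on parallelization.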

Here is a snapshot of the pipeline we created!

All of this was delivered in 2 months!
